Abstract

Metal-organic frameworks (MOFs) are an incredibly diverse group of highly porous hybrid materials, which are interesting for a wide range of possible applications. For a meaningful theoretical description of many of their properties accurate and computationally highly efficient methods are in high demand. These would avoid compromises regarding either the quality of modelling results or the level of complexity of the calculated properties. With the advent of machine learning approaches, it is now possible to generate such approaches with relatively little human effort. Here, we build on existing types of machine-learned force fields belonging to the moment-tensor and kernel-based potential families to develop a recipe for their efficient parametrization. This yields exceptionally accurate and computationally highly efficient force fields. The parametrization relies on reference configurations generated during molecular dynamics based, active learning runs. The performance of the potentials is benchmarked for a representative selection of commonly studied MOFs revealing a close to DFT accuracy in predicting forces and structural parameters for a set of validation structures. The same applies to elastic constants and phonon band structures. Additionally, for MOF-5 the thermal conductivity is obtained with full quantitative agreement to single-crystal experiments. All this is possible while maintaining a very high degree of computational efficiency. The exceptional accuracy of the parameterized force field potentials combined with their computational efficiency has the potential of lifting the computational modelling of MOFs to the next level.

Similar content being viewed by others

Introduction

Metal-organic frameworks (MOFs) are highly porous hybrid materials consisting of inorganic metal oxide nodes connected by organic linkers. Since their first realization, a wide range of potential applications has been discussed including catalysis1,2,3, gas storage and separation4,5,6, electronic devices7,8,9, as well as drug encapsulation and delivery10,11. In view of the seemingly limitless number of possible MOFs, finding the material with properties best suited for a specific application is a sizable challenge. The situation is further complicated by the frequently encountered difficulties when trying to measure the relevant materials parameters, e.g., due to often small crystallite sizes or the inclusion of foreign molecules in the MOF pores. Therefore, computational methods provide an excellent tool to not only predict properties but also to support the development of dependable structure-to-property-relationships. Due to the large number of atoms in MOF unit cells compared to conventional crystalline materials, ab-initio methods like density-functional theory (DFT) are frequently too computationally expensive for that task. This is particularly true when modelling properties at elevated temperatures or for properties that depend on the dynamics of the MOF constituents, like thermal expansion or thermal conductivity. Then it is necessary to resort to lower levels of theory, like classical force field potentials (FFPs), which can speed up calculations by many orders of magnitude12,13,14,15,16, albeit at the price of a significantly reduced accuracy.

The most commonly applied and most easy to use force field potentials are transferable force-fields, which can be straightforwardly applied to modelling the majority of the elements in the periodic table. These include the Dreiding17 force field or the universal force field (UFF)18, where for the latter a variant exists that is specifically adapted for the description of MOFs (UFF4MOF)19,20,21. Especially UFF4MOF has been frequently used in literature for modelling MOFs22,23,24,25, as it is readily available and particularly convenient for rapid structure prediction20. Such highly transferable potentials are, however, not designed for an accurate description of dynamical properties20 and usually result in sizable errors. In the case of MOFs, poor agreement between UFF4MOF-based simulations and DFT-based methods were explicitly demonstrated, for example, for the elastic properties of MIL-5326 and for the thermal conductivity of MOF-5. In the latter case, UFF4MOF overestimated the single-crystal derived experimental data by a factor of 2.627. More advanced potentials focus on a more accurate description of a particular materials class, like GAFF28 or COMPASS29 for organic molecules or BTW-FF13 for MOFs. However, even these potentials with reduced transferability tend to have difficulties in accurately predicting vibrational properties30, which are notoriously challenging to describe31, but which crucially influence many materials parameters.

A higher degree of accuracy (at the price of a further reduced transferability) is observed for potentials individually parameterized for specific molecular fragments. This, for example, applies to part of the MOF-FF family of potentials, which are parameterized for specific organic and inorganic MOF building blocks12,32. Potentials like MOF-FF12,27,33 or QuickFF15 can also be parameterized fully system-specifically giving up on transferability to achieve an even higher degree of precision. The functional form of these force fields is designed relying on chemical intuition, for example, distinguishing between bonding and non-bonding interactions, which is not always ideal for MOFs, in which the dynamic adsorption and desorption of guest molecules can be important. This triggered the development of more flexible potentials like ReaxFF34, but often at the price of an overall reduced accuracy combined with problems regarding energy conservation and thermal stability35. Another (major) disadvantage that we experienced when system-specifically parametrizing classical force fields like MOF-FF was that for achieving the required level of accuracy (compared to DFT and experimental data) a careful tailoring of the chosen set of potential terms, reference data, and fitting algorithms is often crucial27,33. This makes the parametrization process extremely cumbersome.

With increasing computational power and advances in machine learning approaches, more convenient to use and often more accurate machine learned potentials (MLPs) emerged. In recent years, they started to see widespread use for successfully describing many materials36,37,38,39. So far, for MOFs, MLP approaches have been largely limited to neural network potentials, which already show a promising performance in terms of precision26,40,41,42,43,44,45,46. They were employed to reliably describe computationally expensive quantities like the thermal conductivity of several MOFs44. However, many of the said investigations required a relatively large number of DFT reference data for training40,45 and neural network potentials are still computationally less efficient than traditional FFPs when tested on the same computer architecture40,47. In this context it should be noted that some of the implementations of these potentials like GPUMD48 and DeePMD-kit49 feature parallelization on graphical processing units, making them also an attractive option for large simulations44,48. Other types of machine learned potentials like Gaussian approximation potentials (GAPs)50 or moment tensor potentials (MTPs)51 have seen widespread use for conventional materials52,53,54, but their performance has, to the best of our knowledge, not yet been evaluated systematically for MOFs. Importantly, the sizable amount of DFT reference data required for machine learning based approaches can be cut down significantly by either only training molecular fragments of a system40,41,55 or by employing strategies to more efficiently sample phase space26,56.

This raises the questions, how machine learned potentials can be most efficiently used for modelling MOFs, how they can be efficiently parametrized, how they perform for describing elastic and phonon-related properties, and to what extent their more complex form slows down the computations compared to traditional force field potentials. To answer these questions, we employed existing implementations of machine learned potentials. This makes the presented approach as widely applicable as possible, as these tools can be used essentially out-of-the-box with little human development effort required. In particular, we will focus on the kernel-based potentials (derivatives of GAPs) available within the wide-spread Vienna ab-initio Simulation Package (VASP)57, which we will refer to as VASP MLPs, and on the MTPs implemented within the freely available MLIP58 (machine learning interatomic potential) package. By efficiently combining both methods, we will present an efficient strategy for obtaining machine learned force field potentials, which allow the description of the structural and dynamic properties of MOFs at a level of quality that hitherto has been limited to quantum-mechanical simulations. In that spirit, the goal of the present work is not to develop a new type of machine-learned force field, but to efficiently apply the combination of two particularly promising approaches described in literature to the materials class of MOFs. In this way, an easy to implement roadmap is provided for how to lift the computational modelling of structural and dynamic MOF properties to the next level.

Results

Overview of the computational approach

In this section, the fundamental aspects of the proposed approach for generating highly efficient machine-learned potentials for modelling MOFs are described. It also contains details necessary for understanding the discussion of the obtained results. The more technical aspects of the theoretical approaches and details on the applied procedures that are not of immediate relevance for understanding the presented results are summarized in the Methods section, which will, thus, be referred to whenever appropriate. Further details are provided in the Supplementary Information.

Active learning approaches are a particularly promising strategy for sampling phase space to generate reference structures needed for learning force field potentials. However, there have only been few applications of such approaches to MOFs26; moreover, especially in early implementations the training still demanded a rather high number of DFT calculations45. A strategy that has the potential to severely cut down the required computational effort is available in conjunction with a kernel-based MLP in the VASP code. A detailed description of that approach is provided in ref. 56,59. In the following, the key elements of the approach shall be summarized briefly to put the employed strategy into perspective: the active learning approach in VASP trains a kernel-based machine learned potential in the course of a molecular dynamics (MD) run. The potential is built from a basis set depending on local reference configurations (atoms with their surrounding neighbourhoods), which is dynamically expanded during the simulation56,57,60 and which defines the possible parameters for the potential. When the estimated Bayesian error of the forces in a specific MD step exceeds a given threshold, the concurrent force field is not considered accurate enough to continue the molecular dynamics simulation. In that case, the energies, forces, and stresses for the current time step are recalculated using DFT. These DFT calculations are also used to expand the set of reference data, from which the local reference configurations for the various atoms are selected, as described in the Methods section. The accuracy threshold is dynamically adjusted in the course of the molecular dynamics simulation based on the average of the 10 previous Bayesian errors estimates at time steps at which the force field was retrained56. This leads to larger thresholds at higher temperatures, where the absolute values of the forces are higher. Such an adjustment is especially required when training with a temperature gradient, as a fixed threshold would add too few configurations at low temperatures and too many configurations at high temperatures. When enough new reference data have been accumulated, the basis set of the force field is updated with new local reference configurations, as explained in more detail in the Methods section. This approach has already been successfully employed to obtain accurate potentials for a range of materials56,57,60. A possible drawback is that Gaussian approximation potentials have the reputation of being comparably slow47, although, strictly speaking, this has been shown for other variants than the VASP MLPs used here. Moreover, advancements in the field of machine learned potentials occur at a rapid pace potentially also affecting their computational efficiency. This is exemplified by recent implementations of VASP (version 6.4.0 and upward), which promise substantial improvements of the computational efficiency of the used potentials. As described in the ’Evaluating the efficiency of the potentials‘ section, the latter is achieved by technical improvements of the implementation and a reduction of the computational overhead during production runs compared to the generation of training data.

Still, the aforementioned moment tensor potentials have been shown to lead to a particularly high accuracy-to-cost ratio in force-field based simulations (compared, for example, to GAPs)47. Therefore, it appears useful to test, whether they could provide an even improved performance compared also to the latest VASP MLP variants. To ease the understanding of the later discussions, the key properties of MTPs are summarized in the following. Again, more information is provided in the methods section and a detailed account of the underlying theory of MTPs can be found in the seminal paper by Shapeev et al.51. Unlike the VASP MLPs, MTPs describe the atomic energies based on a fixed basis set with a static number of parameters independent of the reference data used. The a priori choice of that basis set allows a more straightforward adjustment of their accuracy and speed51,58. On more technical grounds, the basis sets of MTPs are built from moment tensor descriptors consisting of a radial and an angular part. The radial part is represented by a series of polynomials up to a given order, while the angular part is represented by a tensor up to a certain rank, which primarily determines the many-body character of the potential. Combinations of those moment tensors then define the basis functions, for which the parameters are trained. How many combinations of moment tensors are included in the potential, and, therefore, to what extent many-body interactions are considered, is defined by a ’level’ parameter, which represents the primary way to adjust the speed and accuracy of an MTP. A second parameter to be chosen by the user is the radial basis set size, which defines the maximum order of the used polynomials. Increasing the MTP level and the basis set size leads to an increased accuracy of the MTP, but this comes at the price of a reduced computational efficiency. Unfortunately, for complex systems MTPs are not well compatible with active-learning strategies, like the one sketched above, as the required frequent reparameterization of the MTPs in MLIP becomes very time consuming. This is a consequence of the different mathematical strategies necessary for determining the parameters of the potentials (see Methods section). Further details can also be found in Supplementary Note 2.2.

Another relevant choice regarding the functional form of the MTPs and VASP MLPs is, how many chemically different species are considered in their parametrization. The minimum number of such atom types is given by the number of different chemical elements present in the material of interest. Larger numbers of atom types are obtained when also differentiating between identical elements in different chemical environments. This separation of atom types increases the number of parameters in the potential and allows to describe variations in chemical environments more accurately. However, the computational cost is adversely affected due to the larger required basis set. This increase in computational costs is significantly larger for the VASP MLP than for the MTP, which is related to their functional form, as discussed in the methods section.

As default approach for generating the data presented below, we generated separate parameters for atoms in different chemical environments, and, thus, employed a ‘full’ separation of atom types with further details provided in Supplementary Note 2. In selected cases, to better assess the trade-off between performance and efficiency, we also performed parametrization runs choosing only a single atom type per element.

Strategy: parametrization of the potentials

The parametrization of the MTPs and VASP MLPs requires DFT calculated total energies, forces on atoms, and stresses on unit cells for a sufficiently large number of reference configurations. The necessary reference structures were generated employing the on-the-fly machine-learning force field methodology implemented in VASP56,59, whose conceptual approach has been outlined in the previous section. Details of the applied procedure like the chosen temperature intervals and simulation lengths were optimized in prior tests based on MOF-561. In short, the 0 K optimized structures of the respective systems containing between 54 and 114 atoms in the unit cell were used as starting geometries. A molecular dynamics (MD) simulation was initiated in an NPT ensemble using a Parrinello-Rahman barostat62 at zero pressure in combination with a Langevin thermostat63 starting at 50 K and heating the system up to 900 K over the course of 50,000 time steps of 0.5 fs. Despite the temperature of interest being around room temperature, we chose a rather high maximum temperature of 900 K, because including larger atomic displacement amplitudes at higher temperatures in the reference data set is beneficial for machine learned potentials. This a consequence of such potentials performing well in an interpolation regime, while showing a poor ability to generalize to unknown situations58.

To assess a potentially beneficial impact of increasing the size of the reference data sets, we also created ‘extended reference data sets’ by performing additional learning runs in an NPT ensemble at a constant temperature of 300 K for 100,000 time steps starting from the already obtained potential. Here, the Bayesian error threshold was fixed at a value of 0.02 eVÅ−1 for all systems, which is slightly lower than the threshold value at 300 K during the training runs with continuous heating.

Besides generating reference structures, the above procedure (either including or excluding the ‘extended reference data set’) already produces first versions of the VASP MLPs. Nevertheless, the sampled reference data were used to retrain new VASP MLPs for the production runs. This was done to increase their level of accuracy with optimized settings for the selection of local reference configurations and to increase the computational efficiency by generating potentials excluding the on-the-fly error prediction. The exact details regarding the used settings during the retraining can be found in the Methods section.

In a next step, the DFT reference data generated in the VASP active learning runs were used to train also moment tensor potentials51, similar to the approach applied already in ref. 64 to interfaces. The fitting of the MTP parameters was achieved by minimizing a cost function built from deviations in energies, forces, and stresses between DFT and MTP-predicted data and employing the MLIP package58. Due to the stochastic nature of the initialization of the MTP fits, we performed several fits for each data set. The optimal potential for the respective system was then chosen based on its description of a validation set of reference structures. The associated technical details and numerical settings are again provided in the Methods section including a discussion of the computational cost of the fitting process. A further benefit of using the same reference data sets for the MTPs and VASP MLPs is that then one avoids any reference-bias, which allows a more rigorous comparison of the performance of the different machine learned potentials.

Strategy: benchmarking of the potentials

To demonstrate that the proposed strategy is truly useful, it is crucial to benchmark the obtained potentials for a sufficiently large range of materials and physical properties. Therefore, we tested the VASP MLP and the MTP approaches on several complementary MOF structures extensively described in literature and discussed in more detail in the next section. Here, it should be mentioned that the focus of the present paper will be on the properties of chemically stable and homogeneous materials. Therefore, at this stage, we do not aim at modelling, e.g., chemical reactions, or the diffusion of guest molecules or solvents. Thus, quantities of interest for the benchmarking comprise static and dynamic structural properties of MOFs and derived quantities. These include unit-cell parameters, energies, forces, stresses on strained cells, elastic constants, thermal expansion coefficients, and thermal conductivities. Of major relevance for several of those properties are phonons, where we will not limit ourselves to the Γ-point but will rather study phonons in the entire first Brillouin zone. This is important, as properties like the thermodynamic stability65, as well as heat and charge-transport properties crucially depend on off-Γ phonons66.

The benchmarking will be done primarily via a comparison to reference data generated by DFT and only secondarily via a comparison to experimental data (e.g., when a quantity of interest is not accessible to DFT due to its computational complexity). The reason for that is that the goal of the present study is to assess the quality of the parametrization of the potentials and the suitability of their functional form. A comparison to experiments would additionally depend on the performance of the specific DFT methodology used in the simulations. A general DFT benchmarking for MOFs is, however, not in the focus of the present study. In fact, if alternative DFT-based approaches (relying, e.g., on different functionals or van der Waals corrections) were found to be more suitable for describing certain quantities, one could easily adopt them in the suggested parametrization process, as long as they were efficient enough for the active learning strategy. Other complications with experimental data are (i) that for many of the benchmarked quantities they do not exist and (ii) that at realistic experimental conditions MOF-based materials contain an often-unknown concentration of guest molecules and/or defects. Moreover, they typically consist of crystallites of limited size. This frequently makes the experimental determination of truly intrinsic materials properties of pristine MOF materials highly challenging.

To put the obtained agreement between the properties obtained with machine-learned potentials and with DFT into perspective, we also include a comparison to results obtained with UFF4MOF18,19,20, as an example for an off-the-shelf transferrable force fields widely used for modelling MOFs. As UFF4MOF has not been specifically parametrized for the considered materials, larger errors are to be expected. Still this comparison reveals, what level of improvement in the results can be expected from machine-learned potentials obtained via the suggested approach. It will also answer the question, whether calculating the above-mentioned quantities with force fields like UFF4MOF is sensible at all. In order to not ignore system-specifically parametrized traditional force field potentials, we will also provide a comparison to properties calculated using a MOF-FF32 potential variant, which has previously been parametrized for MOF-527 against static DFT calculations similar to those performed here.

Materials of interest

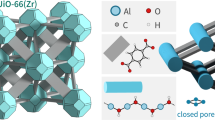

The systems of interest for the present investigation share two features: first, they are widely studied due to their high thermal stability and/or promising properties and, secondly, their unit cells are small enough that they are accessible to DFT simulations with properly converged numerical settings on contemporary supercomputers. Moreover, the chosen MOFs fundamentally differ in their topologies, shapes, dimensionalities, and flexibilities of the pores, and in the nature of the metal ions. Regarding the latter, we focus on closed-shell metal ions to avoid spin-order phenomena as an additional complication. The structures of the systems are shown in Fig. 1.

The following systems are considered: a MOF-5 (Zn), b UiO-66 (Zr), c MOF-74 (Zn), d large and e narrow pore phase of MIL-53 (Al). For the anisotropic systems MOF-74 and MIL-53 (np) side- and top-views are provided. The structures were visualized using the VESTA package119. Colour coding: Zn: brown, Zr: green, Al: blue, C: grey, H: white, O: red.

The first system is MOF-561 consisting of Zn4O nodes and 1,4-benzene-dicarboxylate (bdc) linkers. It forms a stable face-centred cubic structure with space group Fm-3m (225) containing 106 atoms in its primitive unit cell. Being one of the first published MOFs61, it has been intensively studied in the past, and is also included here as it is one of the very few MOFs for which reliable, single crystal thermal conductivity measurement are available67. The second material is UiO-6668. It consists of 12-coordinated ZrO based nodes connected by bdc linkers. Despite its different topology, the system still shows the same general symmetry as MOF-5 with space group Fm-3m (225). UiO-66 is one of the most commonly investigated MOFs69 primarily due to its exceptionally high thermal stability70. Another system of interest is MOF-7471, consisting of 1D extended metal-oxide pillar nodes connected by dobdc (2,5-dioxido-1,4-benzenedicarboxylate) linkers. They are aligned such that a honeycomb structure of hexagonally shaped 1D-extended pores is formed. This leads to a rhombohedral crystal structure with space group R-3 (148) with 54 atoms in the primitive unit cell. The system is known for its exceptional CO2 uptake72. MOF-74 exists for various metals including Ca, Mg, Zn, Mn, Fe, Co, Cu and combinations thereof73. Here, we picked the prominently investigated Zn variant. MOF-74 is particularly interesting due to its pronounced anisotropy. The fourth and final system is MIL-5374, a MOF consisting of metal-oxide node pillars in an octahedral arrangement connected by bdc linkers in the perpendicular direction forming a ‘wine rack’ shaped structure. This MOF is known to occur in two primary phases: a low temperature ‘narrow’ pore phase and a high temperature ‘large’ pore phase75. The large pore phase is orthorhombic with space group Imma (74), while the narrow pore phase is a monoclinic system with space group Cc (9)74. For the purpose of this work, we will consider both phases as separate systems, referring to them as MIL-53 (lp) and MIL-53 (np). MIL-53 has also been synthesized for a wide range of different metals. Here, we study the Al version of MIL-53, as one of the most commonly investigated variants76.

Overview of the simulations

All quantities discussed in the following were calculated for all MOFs with a VASP MLP, with an MTP of level 22 (and a radial basis-set size of 10). The bulk of the presented data are based on force fields trained on the initial set of reference configurations. Only when including the extended reference data had a significant impact, this will be discussed explicitly. In the main manuscript, the focus will be on the results for MOF-5 and MOF-74 as prototypical examples for an isotropic and an anisotropic MOF with data on other MOFs usually mentioned only briefly. The entirety of the results for all MOFs and all reference data sets are provided in the Supplementary Notes3.1, 3.3, and 3.5.

The reference data required for the training of the machine learned potentials were obtained using forces, stresses and energies calculated by density functional theory (DFT), as described above and in the Method section. This yielded between 739 and 998 reference configurations in the initial training, as summarized in Table 1 (together with the Bayesian error threshold at the end of the training). The latter is lower in MOF-5 compared to the other systems. This indicates a somewhat higher degree of accuracy when describing the forces for this system, which is confirmed when observing the evolution of the actual error during training (see Supplementary Note 2.1). The number of reference structures is in a similar range as for other machine-learned force field potentials for MOFs, including available accurate neural network potentials26,44 that either employ an incremental learning approach26, or that are trained on structures derived from ab-initio MD runs44.

Table 1 also contains the number of structures for the ‘extended reference data set’ discussed above. As can be seen in Table 1, for MOF-5, where the automatically set Bayesian threshold was already relatively low after the initial training, only 136 additional reference structures were added in the extended training run. In contrast, the number of structures in the datasets increased by a factor of two to three for the other systems, where the error threshold was higher initially. Further details on the training including the time evolution of the error threshold and the errors of the VASP MLPs compared to the training set are provided in the Supplementary Note 2.1.

Benchmarking: crystallographic unit cells

As a first step in the benchmarking of the obtained machine-learned potentials, their ability to predict unit-cell parameters is assessed. We start with an analysis of the unit-cell volumes. Overall, the agreement of cell volumes between the VASP MLPs, the MTPs, and DFT is excellent. This is evidenced by the relative deviations between force-field and DFT data plotted in Fig. 2a, b on a linear and on a logarithmic scale. For the isotropic systems MOF-5 and UiO-66 the relative deviation between both machine learned potentials and the DFT calculated volumes is below 0.2‰. For MOF-5, the error of the VASP MLPs is reduced even further by approximately two orders of magnitude. This is in stark contrast to the situation for UFF4MOF, where deviations amount to up to 14%. The errors of the machine learned force fields are somewhat larger for the anisotropic MOFs, but they are still comparably low (around 0.3%). Here, the errors of the UFF4MOF volumes are at least one order of magnitude larger. A similar trend emerges, when considering the unit-cell vectors, with negligible deviations for the isotropic systems and somewhat larger errors for the c-parameters in MOF-74 and MIL-53 (see Supplementary Note 3.1.1 and Supplementary Table 12).

For the DFT comparison in (a, b) 0 K optimized unit cells are considered, while for the comparison with experimental data in (c) the values at room temperature are shown (see main text for details). For all systems (MOF-5: blue, UiO-66: red, MOF-74: yellow, MIL-53 (lp): teal, MIL-53 (np): grey) the comparison of the volumes is shown for machine learned potentials trained on the initial reference data set. MTP results are displayed by strongly coloured bars, VASP MLPs results by lightly coloured bars, and UFF4MOF results by empty bars. The simulated volumes in (c) were obtained via NPT simulations at 300 K. They are compared to the average of the experimentally obtained volumes. The individual experimental results for each system are indicated as vertical lines in the respective colour.

When comparing the simulations to experimental unit-cell volumes, one has to keep in mind that the majority of the experiments for MOF-577,78,79,80, UiO-6668,80,81,82, MOF-7483,84,85,86, MIL-53 (lp)75,87,88 and MIL-53 (np)74,75,88 were performed at or around room temperature (around 300 K). Thus, for this comparison we did not merely optimize the geometries using the respective force fields but performed NPT simulations at 300 K for the machine learned potentials and for the UFF4MOF force field (for details see method section). Thus, here the force fields not only need to be able to correctly predict ‘0 K’ structures, but also need to capture the thermal expansion of the MOF crystals. As will be discussed in the section on Future Challenges, this is a particular challenge, as also for the underlying DFT approach modelling thermal expansion coefficients is a sizable challenge83. Moreover, there usually is a rather significant spread in the experimental data. A comparison with the average experimental cell volumes is contained in Fig. 2c. The deviations of the cell volumes of individual measurements from the average values are indicated by the horizontal lines. A summary of the experimental data sets and the simulated 300 K lattice parameters can be found in Table S18. Again, for most systems the values obtained with the MTPs and VASP MLPs are close to the values averaged over comparable experiments (with deviations clearly below 2%). Only for MIL-53 (np) the situation appears to be somewhat worse with an underestimation of the average experimental volume by nearly 5%. In this context, one, however, must note that also experimental values vary by ±4% around the average. For UFF4MOF the agreement is much worse also for the comparison to experimental cell volumes, as can be inferred from Fig. 2c.

Benchmarking: prediction of energies, forces, and stresses

The probably most important benchmark for the performance of the machine learned potentials is how well they describe total energies, forces, and stresses. To ensure that the potentials properly predict situations beyond the reference structures they were trained on, it is important to analyse their accuracy based on an independent set of validation data. Here, the validation set consists of 100 DFT computed displaced structures obtained via an active learning molecular dynamics simulation in VASP at a temperature of 300 K. The deviations of the force-field predicted total energies, forces, and stresses from the DFT simulations are shown in Fig. 3 for MOF-5 and MOF-74. In neighbouring panels, we, on the one hand, compare MTP with VASP MLP results and, on the other hand, MTP with UFF4MOF data, which necessitates very different scales. Corresponding plots for UiO-66, MIL-53 (lp), and MIL-53 (np) portraying a similar situation are contained in Supplementary Fig. 15. The energies and forces for the validation data set were criteria for the choice of the optimal MTPs amongst all generated ones (see above). Thus, in addition to the root mean square deviations (RMSDs) for the chosen MTPs, also average RMSD values for all trained MTPs are reported in parentheses. The individual RMSD values for each generated potential can be found in Supplementary Table 7.

Quantities obtained with the MTP (dark shading, all panels), VASP MLP (light shading; a, c, e, g, i, k) and UFF4MOF (no shading; b, d, f, h, j, l) have been subtracted from DFT reference data for the validation set. Deviations in energies relative to the situation at equilibrium geometry, ΔE, are shown in (a–d), differences in the components of the forces on individual atoms, ΔF, are shown in (e–h), and differences in the components of the stresses Δσ are contained in (i–l). The areas shaded in grey indicate the ranges with errors within 5% and 10% of the absolute DFT values for forces and stresses and within 1% for the energy differences. The RMSD values of the respective quantities for various FFs compared to DFT are noted in the panels, where for the MTPs the RMSD values for the ideal force field and the average RMSD values for all parametrized force fields (in parentheses) are provided. The figure contains only the data for MOF-5 and MOF-74, with the data for all other studied MOFs contained in Supplementary Fig. 15.

For the energies of the displaced structures relative to the equilibrium structures (first line of plots in Fig. 3), one observes an excellent performance of both machine learned potentials (panels a and c) where for the MTPs and VASP MLPs almost all deviations amount to <1% of the absolute values. Notably, for both machine learned potentials the largest differences in total energies for MOF-5 are around 0.3 meV(atom)−1, which is well below the chosen convergence criterion for the underlying DFT calculations, which amounts to 1 meV(atom)−1. In sharp contrast, for UFF4MOF the errors are more than two orders of magnitude larger with deviations of up to 50 meV(atom)−1 (see Fig. 3b).

For MOF-74, the MTP and VASP MLP errors are somewhat larger than for MOF-5, but also for this system they are two orders of magnitude below the UFF4MOF values. The trends for the maximum errors in energies are fully consistent with those of the corresponding RMSD values (see above).

For the forces in the second row of Fig. 3, the distributions of the errors are symmetric around zero. Consistent with the situation for the energies, the spread for the machine-learned potentials is similarly small for MTPs and VASP MLPs, while the transferrable force field at best displays a mediocre performance. For example, for MOF-5 the RMSD for the forces calculated with UFF4MOF amounts to 1.03 eVÅ−1, while it is only 0.02 eVÅ−1 for the MTP and the VASP MLP. In view of the particular relevance of force predictions, we considered also the Dreiding force field as an example for a traditional potential ignorant of MOFs in its parametrization. It performs even worse than UFF4MOF with an RMSD of 2.13 eVÅ−1 for MOF-5. These errors are severe considering that the mean absolute value of all the forces in the validation set for MOF-5 amounts to only 0.59 eVÅ−1. However, not all traditional force field potentials perform poorly. For example, employing a traditional, but system-specifically parameterized MOF-FF potential27 for MOF-5 one obtains a substantially improved RMSD of 0.09 eVÅ−1, which is an order of magnitude lower than the UFF4MOF value (albeit still larger than the values for the machine learned potentials). This (not entirely unexpectedly) suggests that the most important step in improving the performance of a force-field is its system-specific parametrization.

For the errors of the stresses in the last row of Fig. 3, we observe slightly larger values for the VASP MLPs than for the MTPs. This is possibly related to the details in the MTP training, where increased fitting weights for the stress contributions compared to the weights for the contributions of the forces were used. This was not done in the training of the VASP MLPs. For UFF4MOF, the error is again by two orders of magnitude larger. A peculiarity are the significantly larger stress errors for MOF-74 in Fig. 3k compared to MOF-5 in Fig. 3i for all force fields. This can at least in part be attributed to the absolute stress values being distinctly higher in MOF-74.

Notably, we observed a clear reduction of the force and stress errors of the VASP MLPs, when employing the extended reference data set in the parametrisation, such that the initial RMSDs for MOF-74 of 0.27 eVÅ−1 and 0.17 kbar decreased to 0.22 eVÅ−1 and 0.12 kbar, respectively. Conversely, for the MTPs the use of the extended reference data sets has only a minor impact on the errors for forces, energies and stresses (see Table S11).

Concerning the other investigated systems (see Supplementary Fig. 15 and discussion in Supplementary Note 3.1), for the cubic UiO-66 MOF the situation for force, stress and energy errors is comparable to the MOF-5 case, while the anisotropic MIL-53 behaves more like MOF-74 with somewhat higher deviations for forces and stresses.

Benchmarking: vibrational properties of the studied MOFs

After benchmarking the quality of the properties directly provided by the machine learned potentials, it is useful to assess derived quantities. Here, we will focus on vibrational properties, which for crystalline materials are determined by phonons. They crucially impact phononic heat transport, charge transport (via the strong electron-phonon coupling), and elastic properties. As a starting point, Fig. 4a–d compares the low frequency phonon band structures obtained with DFT and the MTPs for MOF-5, UiO-66, MOF-74 and MIL-53 (lp). In Fig. 4e, the comparison between the VASP MLP and DFT for MOF-5 is shown. Figure 4 does not contain data for MIL-53 (np), as for this system it has been challenging to compute a phonon band structure without imaginary modes when using DFT (see Supplementary Note 1.4 for details).

They were computed using the ‘ideal’ MTPs (a–d) trained on the initial reference data set (coloured lines) and employing DFT (black lines) for the following systems: MOF-5 in a, UiO-66 in b, MOF-74 in c and MIL-53 (lp) in d. The comparison between the VASP MLP and DFT for MOF-5 is given in panel e. Panel f contains for MOF-5 the comparison between UFF4MOF and DFT. High symmetry point names correspond to the space group dependent conventions detailed in ref. 120.

The low frequency region in the focus of Fig. 4 is insofar particularly interesting, as it contains the phonon modes most relevant for the above-mentioned transport properties as well as for the relative thermal stability of different phases. It is immediately evident from the plots in Fig. 4 (and in Supplementary Fig. 24 for most VASP MLP cases) that both types of machine learned potentials reproduce the DFT-calculated phonon band structures almost perfectly. Especially for MOF-5 the largest deviations amount to <5 cm−1, with this excellent agreement persisting for UiO-66 for the MTPs. For MOF-74 and MIL-53 (lp), we see some larger deviations for individual phonon bands, however, especially for the MTPs the agreement is still extremely good and outperforms the results for any traditional force field potentials that we are aware of by a large margin. As shown in Supplementary Fig. 24, for the VASP MLPs, the agreement for MIL-53 (lp) and UiO-66 is slightly worse than for the MTPs, but can be significantly improved using the extended reference data set (see Supplementary Note 3.5).

The good agreement between force field and DFT-calculated phonon band structures is lost when employing UFF4MOF, which is illustrated for MOF-5 in Fig. 4f. The situation becomes even worse for MOF-74, as illustrated in Supplementary Fig. 26. UFF4MOF substantially overestimates the dispersion of the acoustic modes, which results in too high group velocities. This is one of the origins of the severely overestimated thermal conductivity for MOF-5 when calculating it with UFF4MOF27. Another peculiarity of UFF4MOF is that it yields a severe overestimation of the frequencies of virtually all optical bands.

While the agreement of the phonon band structures is very reassuring, Fig. 4 displays only the situation for the low frequency phonons. As plotting the band structures over an extended frequency range would not be useful due to the sheer number of bands, in Fig. 5 the phonon densities of states (DOSs) calculated with the force fields are compared to those obtained with DFT for MOF-5 and MOF-74 (data for the other MOFs can be found in Supplementary Fig. 16). In the left panels, phonon DOSs in the same low frequency region covered also in Fig. 4 are displayed, while the right panels show the DOSs for the entire frequency range in which phonons exist in the studied materials. As expected from the preceding discussion, for both the MTPs and the VASP MLPs in the low frequency region one sees mostly minor deviations from the DFT data, which are somewhat more significant for MOF-74 than for MOF-5. Interestingly, this trend also prevails, when plotting DOSs for the full frequency range. The situation deteriorates for UFF4MOF, where it becomes virtually impossible to correlate specific features in the DOSs calculated with the force field with those calculated with DFT. Additionally, UFF4MOF massively underestimates the magnitude of the frequency gap found in the DFT simulations between 50 THz and 90 THz.

Comparisons of phonon DOSs are shown for DFT calculations (black lines) and the force field potentials (shaded areas/coloured lines) for the low frequency phonon modes in (a, c) and for the entire phonon spectrum in (b, d). The plots contain the comparisons for MOF-5 (a, b, blue) and for MOF-74 (c, d, orange) for the MTP, the VASP MLP, and the UFF4MOF. The DOSs were obtained for 11×11×11 q-point meshes used to sample the first Brillouin zone and a Gaussian smearing is applied to broaden the modes. The width of that smearing is set to 0.05 THz for the low frequency region in (a, c) and to 0.5 THz for the entire frequency range in (b, d).

To quantify the quality of the description of phonons by the three types of force fields, Table 2 lists the RMSDs of the Γ-point frequencies for all materials in the low-frequency and in the full frequency ranges. Finally, for MOF-5, Table 2 also contains the results for the MOF-FF force field. Especially in the low frequency region, an excellent agreement with the DFT data is achieved for all machine learned potentials with only single digit wavenumber errors between 1.1 and 3.9 cm−1. Even for the full frequency range and when using only the initial training set, MTP RMSD values for most systems are around an amazing 3 cm−1 with a maximum of 7.6 cm−1 for MIL-53 (np). For the VASP MLPs, deviations in the entire frequency range are somewhat larger ranging from 4.2 to 10.4 cm−1. However, especially for the VASP MLP, the frequency error can be improved substantially when extending the reference data set, as shown in the Supplementary Note 3.1. The MOF-5 frequency error for the conventional and system-specific MOF-FF potential is substantially higher than for the machine-learned counterparts. However, MOF-FF still performs better by more than one order of magnitude compared to UFF4MOF, where the frequency RMSDs can reach several hundred wavenumbers over the entire frequency region.

Benchmarking: elastic properties of the MOFs

Another relevant quantity related to the properties of acoustic phonons (via the Christoffel equation89) is the elastic stiffness tensor, C. The symmetry-inequivalent elements of C obtained from DFT and from the force field potentials are shown in Fig. 6 for MOF-5 and MOF-74. For the MTPs the agreement is close to ideal for both materials with maximum deviations of 1 GPa. For the VASP MLPs, the situation in MOF-5 is similar but in MOF-74 the agreement is slightly worse. Again, it can be improved by considering the extended reference data set. The lower degree of accuracy for MOF-74 is presumably caused by the more demanding description of the elastic constants in that highly anisotropic system90. Additionally, as mentioned already in the context of the stresses on strained cells discussed above, the MTPs were trained with higher relative weights for the stress contributions compared to the forces, which could explain the improved level of accuracy in the MTP-calculated elastic constants.

Shown are the tensor elements \({{\rm{C}}}_{{\rm{i}},{\rm{j}}}\) calculated with DFT and with the MTPs in (a, b), with DFT and with the VASP MLPs in (c, d), as well as with DFT and UFF4MOF in (e, f). The plots shown here contain data for MOF-5 in (a, c, d, blue) and MOF-74 in (b, d, f, orange) with equivalent plots for the other studied MOFs provided in Supplementary Fig. 17. In the upper and central panels, small filled symbols indicate values obtained with potentials trained on the initial reference data set and large empty symbols refer to the values for potentials trained on the extended reference data set. The solid black lines pass through the origin and have a slope of 1 such that they indicate an ideal agreement between force field and DFT simulations.

Not unexpectedly, the performance of UFF4MOF is much worse. An extreme example is the C33 constant in MOF-74, which when using UFF4MOF is calculated to be >5 times as high as in the DFT reference (see Fig. 7f). Interestingly, the bulk modulus for MOF-5 calculated via the Voigt average form the components of the stiffness tensor is rather similar when calculated with UFF4MOF (16.8 GPa) and with DFT (16.1 GPa). These values are consistent with the literature14, but considering that the individual components of the stiffness tensor differ substantially between the two approaches, the seemingly good agreement for UFF4MOF for the bulk modulus has to be attributed to a fortuitous cancellation of errors.

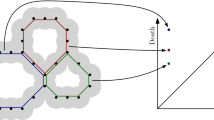

In a the RMSDs of the forces for the validation set between DFT and various force-field approaches are shown as a function of the CPU time used per time step and atom for MOF-5. Data points are included for: MTPs at various levels trained with 7 atom types (blue squares), a level 22 MTP trained with only 4 atom types (blue diamond), two variants of the VASP MLP trained with 4 atom types (red square) and with 7 atom types (purple square), a system-specifically trained MOF-FF variant33 (green triangle), and the transferable UFF4MOF19 (pink star) and Dreiding17 force fields (orange down triangle). The speed was obtained by performing an MD simulation in the NPT ensemble with further details provided in the main text. In b, the impact of including the separation of atom types on the validation set force RMSD is shown for the MTP and the VASP MLP for MOF-5 and MOF-74. c contains the values of force RMSDs and CPU times for different variants of the VASP MLPs for MOF-5 and MOF-74. The colour coding refers to the number of used atom types when training the potential (purple symbols: 7 atom types for MOF-5 and 8 for MOF-74; red symbols: 4 atom types). Inverted triangles indicate potentials trained on the extended reference data set, all other potentials were trained on the initial reference data set. Empty symbols indicate MLPs obtained retraining the potential using the default settings of VASP 6.4.1 and filled symbols represent MLPs obtained with adjusted settings. Symbols on the left side (’refit’ bracket) indicate MLPs after a refit designed for production runs. Additional variation in the settings comprise: increasing the maximum number of local reference configurations from 1500 to 3000 for the 4 typed potential (\({{\rm{N}}}_{{\rm{B}}}^{\max }\), diamond) and reducing the cutoff of the angular descriptors from 5 to 4 (\({{\rm{R}}}_{{\rm{cut}}}^{{\rm{a}}}\), pentagons). In the lower left circle, results for MOF-5 are shown, while the rest of the panel only contains data for MOF-74. The CPU time used was tested for a 31104 atom sized supercell of MOF-74 with otherwise the same settings as for MOF-5.

The numerical values of the tensor components as well as plots for UiO-66 and MIL-53 are contained in Supplementary Note 3.1.4. The situation for the machine learned potentials is again comparable to that in MOF-5 and MOF-74 with deviations being largest for MIL-53 (np) with an RMSD for all tensor elements of 1.2 GPa. However, this is still quite very good, given the higher absolute values of the tensor elements in that material.

Benchmarking: thermal conductivity

As a final benchmark quantity, we consider the thermal conductivity. It is again intimately related to phonon properties, where now also anharmonic effects play a decisive role. As calculating the thermal conductivity of MOFs employing DFT is far beyond present computational possibilities, the comparison is restricted to experimental data and here to MOF-5, as to the best of our knowledge this is the only materials amongst the ones studied here for which high-quality single crystal data exist. According to ref. 67, the experimental room temperature value of the thermal conductivity of MOF-5 amounts to 0.32 W(mK)−1 and it has already been shown that the UFF4MOF and the Dreiding force fields fail in reproducing that value (yielding thermal conductivities of 0.847 W(mK)−1 and 1.102 W(mK)−1, respectively), while the system-specifically parametrized MOF-FF variant provides a sensible value of 0.29 W(mK)−127. Here, non-equilibrium molecular dynamics simulations for MOF-5 were performed using a level 18 MTP. Notably, for these simulations we used a lower level of the MTP to reduce the computational cost of correcting for finite size effects, which requires simulations for various supercell lengths91,92. This is, however, not expected to have a major impact, as the difference in the uncorrected thermal conductivities for selected cell lengths between level 22 and level 18 MTPs are minimal as shown in Supplementary Note 4.2. The resulting thermal conductivity obtained from NEMD simulations for cell lengths ranging from 208 to 416 Å perfectly reproduces the experiment, yielding a value of 0.32 W(mK)−1. As a technical detail, it should be mentioned that this result has been obtained applying the traditional approach of determining the temperature gradient in the NEMD simulation in the region of a linear temperature decrease. When instead employing the method of Li et al., determining the temperature gradient from the temperature difference between the hot and cold thermostats91 (which we used previously27,33), a somewhat lower value of 0.26 W(mK)−1 is obtained. Therefore, we also employed the ‘approach to equilibrium molecular dynamics’, AEMD, method as a complementary molecular dynamics-based strategy for obtaining thermal conductivities. This again yielded a value of 0.32 W(mK)−1, confirming the excellent agreement of the MTP simulations with the experimental data. A full discussion und further details regarding the heat transport simulations are provided in Supplementary Note 4.2. At the time of the calculations for this paper, NEMD or AEMD simulations were not accessible using VASP, hence no comparison between the VASP MLPs and MTPs can be provided for the thermal conductivity.

Future challenges

While the above discussion highlights the superior performance of the considered machine learned potentials for predicting various observables, we will next discuss thermal expansion as a process for which the performance of the machine learned potentials appears to be less satisfactory. In the case of thermal expansion, one again needs to compare the calculated thermal expansion coefficients to experimental data, as simulating the thermal expansion of MOFs with DFT is extremely challenging and prone to errors (see discussion in the Supporting Information of ref. 83). As far as the available experimental data are concerned, some of the issues were already mentioned in the context of the crystallographic unit cells at 300 K for the narrow pore phase of MIL-53, where the experimental results also show a substantial spread. The situation is also problematic for MOF-74, where our own measurements yielded only very minor changes of the unit-cell volume with temperature83,93. In view of these uncertainties, thermal expansion shall only be briefly discussed in the following, with significantly more details provided in Supplementary Note 4.1.

For example, for MOF-74, the MTPs/VASP MLPs predict a very small positive (1.6 ∙ 10−6 K−1 and 1.2 ∙ 10−6 K−1/2.0 ∙ 10−6 K−1 and 6.9 ∙ 10−6 K−1) thermal expansion coefficient in linker directions instead of a very small negative value (−2 ∙ 10−6 K−1) suggested by the experiments. Due to the generally limited accuracy of the experimental and computational methods this difference might still be acceptable. What is, however, more concerning is that along the pore direction, the MTPs/VASP MLPs predict a thermal expansion value of 31.0 ∙ 10−6 K−1/25.3 ∙ 10−6 K−1, which is nearly an order of magnitude larger than the experimental result of ~4 ∙ 10−6 K[−183.

There are, however, also cases in which the agreement between simulations and experiments is better, like for MOF-5, where the simulated thermal expansion coefficients of −11.3 ∙ 10-6 K−1 for the MTP and −11.5 ∙ 10-6 K−1 for the VASP MLP are rather close to the experimental values, which range between −13.1 ∙ 10−6 K−1 and −15.3 ∙ 10−6 K−178,79,94. As already mentioned above, at this point it is unclear, whether the varying quality of the agreement between theory and experiments arises from the machine learned potentials, or whether the problems are at least in part inherent to the DFT approach used for the reference data generation. An indication that the chosen DFT methodology does have a significant impact is that for MOF-74 it has been observed that when applying the numerically more stable mode Grüneisen theory of thermal expansion, the sign of the thermal expansion coefficient in linker direction would actually depend on the chosen functional (PBE vs. PBEsol)83.

The other major challenge when applying the MTP approach to MOFs is to train the potential such that a force field is obtained that is fully stable for the targeted simulation conditions. Stable here means that the system in question remains structurally intact also over hundreds of thousands (or even millions) of molecular dynamics time steps that one would, for example, apply when performing NEMD simulations. A structural disintegration of the material is not possible for simple force field potentials characterized, e.g., by harmonic bonding potentials, but for approaches based on mathematically more complex models, like machine learned potentials, this can become a problem. This is especially true when in the stochastic description of the atomic motion at finite temperatures large displacements occur for which the potential has not been sufficiently well trained.

Improving the long-term stability of the force fields was one of the main motivations for increasing the final temperature in the on-the-fly training runs to 900 K. Nevertheless, not all trained force fields turned out to be stable over hundreds of thousands of time steps even at room temperature. Notably, the situation turned out to be significantly more problematic for the MTPs than for the VASP MLPs, as discussed in detail for the MTPs in Supplementary Note 2.2. We attribute the less satisfactory performance of MTPs in the context of thermal stability to their poorer ability to generalize compared to GAP-type potentials, as already suggested previously54. This has been attributed to the unregularized global basis functions of the MTP54. The MTPs for MIL-53 were particularly problematic, as the material features a comparatively flat energy landscape. One possibility to mend this problem was to train several MTPs using the same reference data. In that case, due to variations originating from the stochastic nature of the initialization of the training procedure, some potentials turned out to be substantially more stable than others. However, this is not an ideal solution, as additional extensive MD test runs would be required for each MTP to judge the thermal stability. Moreover, training a large number of accurate MTPs is computational costly. Notably, also the extended reference data set obtained based on the molecular dynamics simulations at 300 K did not substantially improve the situation. However, adding 1009 DFT reference structures based on active learning runs at 400 K at a reduced error threshold did result in MTPs for MIL-53 (lp) that were all stable at least up to room temperature.

These results suggest that at least under certain circumstances adding more strongly displaced reference structures obtained by higher-temperature learning steps helps overcoming the problem of long-term stability of the force field. Unfortunately, this is associated with a significantly increased learning effort, where additional reference data beyond the first 500–1000 structures do not substantially improve the accuracy of the MTPs. Additionally, for MTPs it could be beneficial, to further improve the data sampling approach or to augment the force fields with potential terms that prevent a structural disintegration of the studied system.

In general, the stability issues are much less pronounced for the VASP MLPs, which use a basis set more suitable for generalization to unknown situations. Based on our stability tests in an NPT ensemble, all VASP MLPs trained on the initial reference data set including atom type separation were stable up to 700 K, which is the maximum temperature included in the tests (see also Supplementary Note 4.1.2). Still, at very high temperatures some most likely unphysical cell deformations took place for some of the systems, but at least the structure never disintegrated.

Evaluating the efficiency of the potentials

So far, the discussion focused on the precision of the machine learned potentials, demonstrating that they show an excellent performance when compared to DFT data. What has not been touched upon so far is an evaluation of the computational efficiency of the potentials. Here, the MTPs allow a straightforward adjustment of the number of parameters of the potentials and, thus, of their numerical efficiency. The primary hyperparameter to tune in this context is the so-called ‘level’ of the MTP, which controls the degree to which many-body interactions are included and, thus, substantially impacts the number of parameters. Consequently, it has a much more profound impact on both precision and performance than the number of radial basis functions, which, thus, has been set to 10 for all presented data. The other setting of the MTPs that substantially affects the computational efficiency is the cutoff for the interactions. However, if one deviates too much from the default value of 5 Å (chosen also here), the precision of the potentials deteriorates severely. Therefore, using the same reference data as above we systematically varied the level of the MTPs from 10 to 24 and compared the accuracy (in terms of force RMSDs) and the speed of the force fields in Fig. 7. In panel a, the computational speed of the machine learned potentials is correlated with their accuracy and compared to more traditionally force fields for MOF-5. For this comparison we use three different types of conventional potentials: a system-specifically parameterized MOF-FF potential for MOF-5 used in our previous work32,33, the transferable universal force field18 extended for MOFs (UFF4MOF)19,20, and the transferable Dreiding17 potential. For the VASP MLPs, panel (a) contains only the data for the force field refitted on the initial reference data set, as described in the computational approach section (using VASP 6.4.1). The computational speed was evaluated for NPT simulations on 4 × 4 × 4 conventional supercells of MOF-5 carried out on 64 cores of a dual socket AMD EPYC 7713 (Milan) node of the supercomputer VSC-5 (see https://vsc.ac.at/systems/vsc-5/). It should be mentioned that differences in the speeds of the force fields are caused by differences in scaling with the number of nodes (see Supplementary Note 4.1.4) and can be affected by using different libraries when compiling the VASP and the LAMMPS codes. Additionally, we stress that the tests performed here are far from comprehensive and are restricted to the force fields considered in this study. There certainly are other options, like, for example, the GPUMD48 force fields, for which a very high speed at good accuracy has been reported for MOFs44.

The traditional UFF4MOF and Dreiding potentials are the most computationally efficient options, but they also suffer from a poor description of the forces with RMSD values above 1 eVÅ−1. As discussed already above, the system-specifically parameterized MOF-FF potential hugely improves the situation with an RMSD value of 0.09 eVÅ−1. However, the more complex functional form including cross terms and an optional, more accurate treatment of long-range electrostatic interactions via a particle-particle particle-mesh solver95 slows down MOF-FF by a factor of ca. 5 compared to the more simple force fields.

Significantly more accurate are MTPs, where the highest levels show the best degree of accuracy among the tested potentials for MOF-5 with a force RMSD of 0.016 eVÅ−1. Interestingly, reducing the level of the MTP from 24 to 10 yields a speedup by a factor of 28, but at the same time less than doubles the force RMSD (to 0.033 eVÅ−1) such that the level 10 MTP still clearly outperforms MOF-FF. In fact, that MTP is computationally only about twice as expensive as UFF4MOF, but with a force RMSD reduced by a factor of 34. For the level 22 MTP, we also tested the performance without separating the atom types: as can be clearly seen in Fig. 7a, this has hardly any impact on the computational efficiency, but decreases the degree of accuracy (with the force RMSD increasing from 0.016 eVÅ−1 to 0.021 eVÅ−1 for 7 and 4 atom types, respectively).

The VASP MLPs are of a similar accuracy as the mid- to high-level MTPs, but they are at least a factor of 2–3 slower than the highest level MTPs. At least in part this can be attributed to the rather unfavourable scaling of the parallelization of the molecular dynamics part of VASP, as shown in Supplementary Note 4.1.4. For example, when using only up to 16 cores, the VASP MLP with 4 atom types is only about 50% slower than the level 22 MTP. We expect this scaling to be improved in future implementations.

Figure 7a also shows that the VASP MLP with 7 atom types is about a factor of 2 slower than the one with 4 types while only offering a moderately increased degree of accuracy. Conversely, the gain when considering more atom types in the VASP MLP is much more significant for MOF-74, as shown in Fig. 7b. Thus, in the case of the VASP-generated potential, whether or not it is useful to consider more atom types is system dependent. As indicated already above, the situation for the MTPs is quite different: there, the benefit of considering more atom types is generally larger, while the additional computational cost is negligible. Thus, for MTPs differentiating between atoms in chemically different environments is definitely advisable for the considered systems. We tentatively attribute this difference between the MTPs and VASP MLPs to the fact that a larger number of atom types significantly increases the number of adjustable parameters in MTPs, while in VASP MLPs the size of the basis set is adjusted dynamically, which at least in part anticipates the benefit of more atom types for the accuracy.

In the course of our work, we learned that the speed and accuracy of the VASP MLPs retrained employing the VASP 6.4.1 default settings clearly outperformed VASP MLPs trained with the default settings of earlier versions of the code both in terms of speed and especially accuracy. The latter resulted primarily from including a larger number of local reference configurations in a reselection process (datapoints below the ‘select’ bracket, which serve as the reference data for the following comparison). A more in-depth discussion of the dependence of the performance of the VASP MLPs on the version of the code can be found in Supplementary Note 3.7. Another factor hugely impacting the speed of the VASP MLP is whether or not the live error estimation in the VASP MLP is performed. As shown in Fig. 7c, when this is not the case (like for the potentials used to generate the symbols under the ‘refit’ bracket), the computational cost is reduced by about two orders of magnitude. For the ‘refit’ calculations also the accuracy of the VASP MLP is slightly improved due to the use of a singular value decomposition approach96, when refitting the parameters (in contrast to the less accurate but more efficient LU factorisation during training). When additionally increasing the maximum number of reference configurations retained in the VASP MLP (red diamond), a higher level of accuracy is achieved, albeit at the cost of a reduced computational speed. To again improve the speed of the potential, reducing the radial cutoff of the angular descriptors appears to be a viable option. However, in the performed tests, (see pentagons) the difference compared to choosing the default value is only minor. The most accurate VASP MLP for MOF-74 is achieved combining a large number of atom types with an extended reference data set (see purple down-triangle). Overall, for the tests under the 'refit' bracket one observes that, whenever one increases the accuracy of the force prediction by accordingly setting one of the rather diverse parameters explored for the VASP MLPs, this also decreases the overall computational speed.

Discussion

In summary, in this work we describe a strategy for obtaining machine learned potentials that allow the description of the properties of MOFs at essentially DFT quality with hugely reduced computational costs. This strategy uses the active learning approach of VASP56,97 for sampling the configuration space and for generating reference structures. On these reference data, machine learned potentials can already be trained in VASP, which are highly accurate and efficient (especially when using the latest releases of the code). The resulting force fields are nearly two orders of magnitude more accurate, but also clearly slower than traditional potentials like UFF4MOF. If speed is the priority, the effective reference data generation procedure of VASP can be combined with the numerical efficiency and also high accuracy of moment tensor potentials (MTPs)51,58. They allow flexible adjustments to their speed and accuracy by manually adjusting the size of the basis set leading to potentials, where the faster variants are only less than an order of magnitude slower than traditional transferable potentials while still maintaining an exceptionally high degree of accuracy. As all necessary codes (the VASP and MLIP software packages) are readily available, a general use of the proposed strategy should be comparably straightforward.

A systematic comparison of predicted forces, energies, stresses, phonon band-structures, and thermal transport coefficients shows that the such-trained MTPs and VASP MLPs yield a truly amazing agreement with DFT reference data (as the primary benchmark quantities) and with experimental data in cases where DFT data are not available. The said tests were performed for a representative selection of isotropic as well as anisotropic MOFs including MOF-5, MOF-74, UiO-66, and the narrow and large pore phases of MIL-53. Compared to transferrable force fields like UFF4MOF the improvements in prediction quality is enormous with errors for certain quantities dropping by several orders of magnitude and this at only very moderately increased computational costs. Only when attempting to describe thermal expansion processes, distinct deviations between the machine learned potentials and experiments are observed, where there are some indications that in that case the reference DFT methodology might be at least partly at fault. Notably, in the case of MTPs for some systems also the thermal stability of the force fields needs to be further improved. This can become an issue when performing extended molecular dynamics runs comprising hundreds of thousands of time steps, but for the systems considered here the problem can be mediated by including reference data from dedicated high temperature runs. The VASP generated potentials suffer substantially less from issues of thermal stability. This makes them a highly promising option especially for flexible materials with flat potential energy surfaces.

Overall, the data presented here and other recent advancements in MOF force field development26,44 clearly point towards machine-learned potentials as becoming a game-changer for the modelling of this complex class of materials. This particularly applies to the simulation of dynamical MOF properties, which are often computationally not accessible to ab-inito methods like DFT but for whose description traditional force fields are either too inaccurate or too tedious to parametrize. Here, the use of VASP MLPs or MTPs can speed up the simulations by many orders of magnitude keeping essentially the accuracy level of the reference method used in their parametrization.

Methods

In this section, we will discuss the most important settings and technical details relevant for this work. A more detailed comparison of the nature of MTPs and VASP MLPs is also provided. Additional technical information and convergence tests are contained in the Supplementary Information.

The DFT approach

For the required reference ab-initio calculations, density functional theory (DFT) simulations were performed with the Vienna ab-initio Software Package (VASP)98,99,100,101,102,103 employing the Perdew-Burke-Ernzerhof (PBE) functional104,105 and applying Grimme’s D3 correction with Becke-Johnson damping106,107 to account for dispersive forces. For all training runs a plane-wave energy cutoff of 900 eV was chosen after careful convergence tests based on total energies, vibrational frequencies and elastic moduli. The energy convergence for the self-consistent field approach was set to 10-8 eV. The structures for each system were optimized until the maximum absolute force component in the system reached at least <10-3 eVÅ−1 using a quasi-Newton optimization algorithm. Further details on the VASP settings including the system-specifically chosen k-space sampling mesh are provided in Supplementary Note 1.

Functional form of the machine learned potentials

This section introduces aspects regarding the functional form of the potentials that are relevant for understanding the fundamental differences between the kernel-based VASP MLPs and the moment tensor potentials. It also summarizes the chosen settings used throughout this work. A comprehensive description of the VASP MLPs and of the MTPs beyond specific aspects relevant to assess the results presented in this work can be found in refs. 51,56.

The energies for each atom Ei in the VASP MLPs56 are computed from kernels K which represent a measure for the similarity between local reference configurations, iB, and the local configuration of interest, i.

The kernel is built from the expansion coefficients of the radial and angular descriptors containing information regarding the geometry of the local configurations. The descriptors are defined up to a certain cut-off, which was set to 5 Å during active learning. For the reselection of the reference configurations and subsequent refits, the cut-off for the radial descriptors was set to 8 Å. The weights, wiB, represent the parameter that needs to be trained for each local reference configuration iB. This is done not only for the energies but also for the forces and stresses using the derivatives of the kernel with respect to the atom positions and cell parameters. Notably, the number of local reference configurations, iB, increases with the number of reference structures contained in the training set (up to a maximum value).

Conversely, the basis set of the MTPs51 is built from moment tensor descriptors, M, describing the neighbourhood, n, around an atom i:

These descriptors are constructed from a radial part fμ and an angular part represented by a series of ν outer products of the distance vector rij. The radial part consists of a sum of radial basis functions defined up to a distance cut-off for each pair of atom types zi and zj, where the primary component is a polynomial, Qβ, up to a maximum order NQ. This number is usually referred to as the number of radial basis functions. This means that NQ parameters \({c}_{\mu ,{z}_{i},{z}_{j}}^{\beta }\) are associated with the radial part of the descriptor:

The indices μ and ν define the ‘level’58 of the MTP with \({\rm{level}}\left({M}_{\mu ,\nu }\right)=2+4\mu +\nu\). The level specifies, which combinations of moment tensor descriptors are included to form the basis functions Bα, which then lead to the energy of an atom around a neighbourhood using

Thus, the parameters that need to be trained are the radial parameters \({c}_{\mu ,{z}_{i},{z}_{j}}^{\beta }\) for each atom pair and value of μ and the parameters \({\xi }_{\alpha }\) for each basis function. The primary difference compared to the VASP MLPs is that here the number of parameters does not depend on the amount of training data and can be adjusted freely based on the needs of the user by setting the values for the level and NQ. Since especially the angular component of the moment tensor descriptors can be reused for many different basis functions, a high level of computational efficiency is maintained.

On-the-fly training of the VASP MLPs

As indicated in the previous section, the energy, force and stress weights for each of the basis functions in a VASP MLP need to be trained on the reference data. For this, the reference data are generated on-the-fly in an active learning approach, which is based on molecular dynamics simulations. These were carried out using a time step of 0.5 fs. The friction coefficient for the Langevin thermostats and barostats was chosen to be 10 ps−1 and a fictious mass of 1000 amu was assigned to it for all systems, as required for the Langevin barostat method of Parrinello and Rahman108. In the initial training runs, the temperature was set to gradually increase from 50 K to 900 K over 50,000 time steps. To generate the independent test sets to validate energies, atomic forces and stress tensors of the individual force field potentials, another set of completely independent on-the-fly machine learning force field runs was performed from scratch in an NPT ensemble at 300 K with a time step of 0.5 fs. These runs continued until 100 DFT calculations had been performed, which then served as system-specific test sets. In the context of generating the test set, the machine learning approach was used primarily to improve the sampling of phase space compared to, e.g., picking every nth configuration from a conventional ab-initio MD run.

The complexity of the selection and training procedure evidently leads to a number of parameters, whose choice leaves a large room for optimizations. This is also reflected by advancement of the VASP code, where version 6.4.1 led to significant improvements over version 6.3.0. Since the training runs for this work were carried out with version 6.3.0, the local reference configurations were reselected with version 6.4.1 with the ML_MODE=select setting. Therefore, when discussing actual numerical values for the parameters in the following, we give them for the training run and for the situation after the reselection of local configurations in all cases in which they differ (see also the Supplementary Note 3.7 and ref. 56 for more details).